In recent years, the evolution of synthetic voice technologies has opened a Pandora's box of potential malicious uses, ranging from sophisticated financial frauds to the spread of disinformation through digital channels. The indistinguishability of cloned voices from real ones has necessitated the development of robust forensic methodologies capable of discerning authentic human speech from its AI-generated counterparts. This article delves into the cutting-edge techniques designed to distinguish real voices from cloned ones, offering insights into the efficacy of various feature extraction methods, from perceptual to spectral and end-to-end learned features.

The Forensic Challenge of Synthetic Voices

Synthetic voice cloning technologies have made significant strides, enabling the creation of highly naturalistic and identity-preserving vocal reproductions. These advancements pose serious challenges for digital security and integrity, as they can be weaponized for creating convincing deepfake audios and videos. Consequently, there is an urgent need for reliable methods to differentiate between real and synthetic voices, a task that has become central to the field of audio forensics.

Distinguishing Real from Cloned Voices: Techniques and Efficacy

Our exploration reveals three primary techniques for differentiating real voices from their synthetic clones:

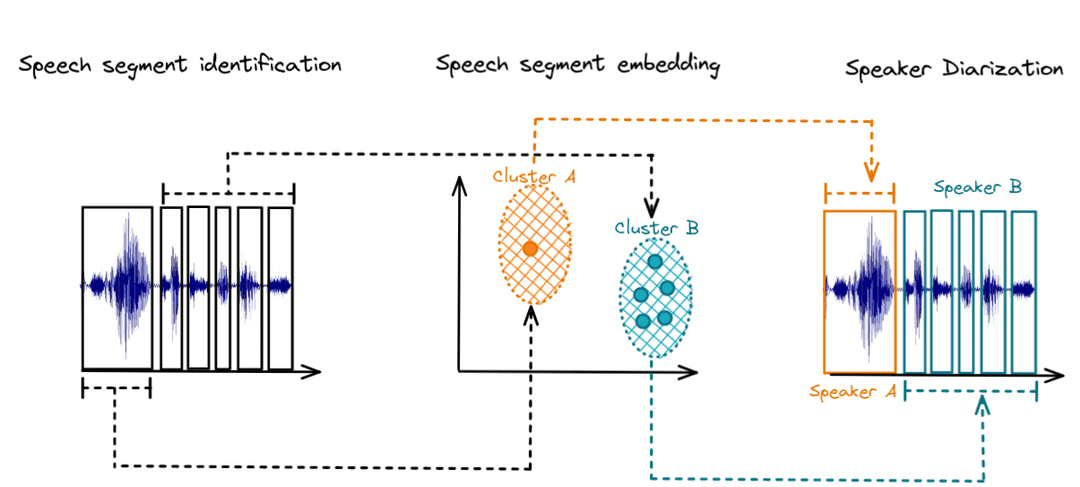

Low-Dimensional Perceptual Features: These features are highly interpretable but tend to offer lower accuracy in distinguishing real from cloned voices. Generic Spectral Features: These provide a broader analysis spectrum but still lag behind in accuracy when compared to more sophisticated methods. End-to-End Learned Features: Utilizing deep learning, this approach converts raw audio into high-dimensional embeddings, significantly outperforming other methods in accuracy. A key component of the success in the end-to-end learned features is the use of Pyannote Embedding, trained through end-to-end additive margin angular loss to enhance speaker identity separation in the latent space. By treating these 512-dimensional embeddings as features for classification, we achieve a marked improvement in identifying cloned voices.

Comparative Analysis and Findings

When pitted against one another, the end-to-end learned features consistently showcased superior performance, yielding an equal error rate (EER) between 0% and 4%, and proving robust even in the face of adversarial attacks. This stark contrast in efficacy is evident when comparing the average EER across single datasets: learned features virtually eliminated errors, while perceptual and spectral features lagged significantly behind.

The Growing Need for Audio Forensics

The advancements in voice cloning have far outpaced the development of corresponding forensic techniques, especially when compared to the progress made in image and video forensics. With synthetic voices now more natural and convincing than ever, the capability to create realistic deepfake content has surged, necessitating a robust forensic approach that can keep pace with these technological advancements.

Discussion and Future Directions

The differentiation between real and cloned voices is not just a technical challenge; it's a critical step in combating digital fraud and disinformation. While low-dimensional features offer the appeal of interpretability, the superior discrimination capabilities of end-to-end learned features make them a more effective choice for forensic analysis. However, the ongoing evolution of voice synthesis technologies necessitates continuous updates to these forensic techniques to ensure their effectiveness against newer synthesis architectures.

Moreover, the importance of developing both single- and multi-speaker detection techniques is underscored by the diverse nature of voice cloning applications. Single-speaker methods excel in identifying unique speech patterns, while multi-speaker approaches offer scalability to protect a broader spectrum of individuals.

Finally, as the digital landscape evolves, so too must our strategies for safeguarding authenticity. Encouraging the synthesis side of AI to incorporate imperceptible watermarks into generated content represents a proactive approach to mitigating misuse. Together with advanced forensic techniques, such measures will be crucial in maintaining the integrity of digital content in the face of increasingly sophisticated AI-generated deepfakes.

Accuracy

Training Embeddings on datasets from Playht, ElevenLabs, Coqui, Azure on a basic randomForests got an accuracy of 95%.

Helpful Resouces