Teaching assistants for Intro to Logic courses often spend hours grading assignment submissions, tediously checking that each proof is correct.

Imagine yourself in the shoes of a professor or a TA. You’re teaching a class of 50 students and each student has one assignment. Each assignment is 10 lines of logic. That’s 500 lines of logic that a professor or a TA has to follow line by line in order to check the students’ work!

Sounds painful. Time to automate!

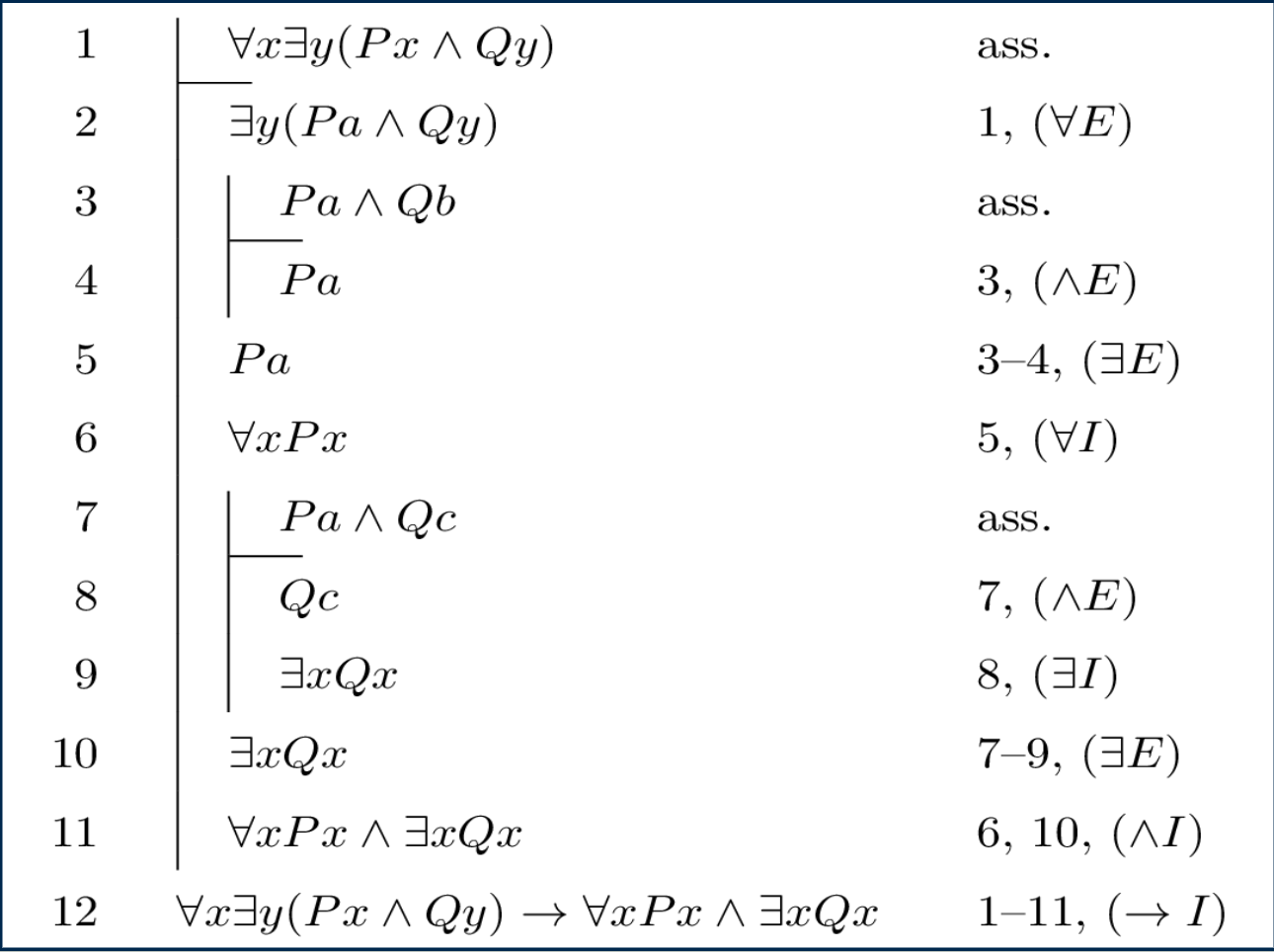

Our automated proof checker takes in one or more premises, a conclusion, and the proof steps the user entered, and checks whether those steps correctly prove the conclusion from the premise(s).

Here’s a great primer on logic, in case you’re not familiar.

In this example, the student is trying to prove that if A is true, then ~(~A) (not not A) is also true (a simple proof example). The student enters the one-line proof “double negation”, clicks “check proof”, and the system congratulates the student on their correct submission.
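To make the double negation example concrete, here is a minimal sketch of how a single proof step like this could be checked. It is illustrative only and is not the project’s or OpenLogic’s actual implementation; the `Formula` type and the `atom`, `not`, and `checkDoubleNegation` names are hypothetical.

```typescript
// Hypothetical formula representation (an assumption for this sketch).
type Formula =
  | { kind: "atom"; name: string }
  | { kind: "not"; operand: Formula };

const atom = (name: string): Formula => ({ kind: "atom", name });
const not = (operand: Formula): Formula => ({ kind: "not", operand });

// Structural equality of two formulas.
function equal(a: Formula, b: Formula): boolean {
  if (a.kind === "atom" && b.kind === "atom") return a.name === b.name;
  if (a.kind === "not" && b.kind === "not") return equal(a.operand, b.operand);
  return false;
}

// Returns X when given ~~X, otherwise null.
function stripDoubleNegation(f: Formula): Formula | null {
  if (f.kind !== "not") return null;
  const inner = f.operand;
  if (inner.kind !== "not") return null;
  return inner.operand;
}

// A step justified by "double negation" is accepted when one side is
// exactly the other side wrapped in two negations (in either direction).
function checkDoubleNegation(premise: Formula, conclusion: Formula): boolean {
  const fromConclusion = stripDoubleNegation(conclusion);
  const fromPremise = stripDoubleNegation(premise);
  return (
    (fromConclusion !== null && equal(fromConclusion, premise)) ||
    (fromPremise !== null && equal(fromPremise, conclusion))
  );
}

const A = atom("A");
console.log(checkDoubleNegation(A, not(not(A)))); // true: A proves ~(~A)
console.log(checkDoubleNegation(A, not(A)));      // false: A does not prove ~A
```

A full checker would repeat this kind of check for every line of the proof, with one function per inference rule.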

Beyond simply enabling students to check proofs, we aimed to build a comprehensive system that lets professors create proof assignments and lets students sign up with their college email ID to view and solve them. As soon as a student successfully completes an assignment, the system marks it as completed and stores the solution, in case the professor or teaching assistant wants to verify it.

Check out our live prototype at: openlogic.gautamtata.com. **A dozen students used our proof checker this past semester at CSU-MB to check the validity of their assignments. Our system will be used as the de facto proof submission software for a class of 70 students in Fall 2019.**

- 12 students used it for their class.
- It will launch in Fall 2019 with CST 329 - Reasoning with Logic @ CSUMB, totaling 70 students.

Motivation

My friend Jay and I were brainstorming ideas for our senior capstone project at CSU Monterey Bay. We started conversations with many professors, including one who was going to teach a course on logic, in hopes of discovering a concrete problem that we could work on.

I’d previously built other apps and tools, but failed to get significant traction. Looking back, my weakness was not validating an idea well enough before spending a lot of time developing it only to find out later that no one wanted to use it. It was frustrating to say the least, and I wanted to make sure I didn’t spend another valuable minute on building something no one found useful.

I was motivated to have as many conversations as needed with potential audiences to uncover real problems and draft up products that would actually solve those problems.

The professor we chatted with, Dr. Burns, was excited to share an annoying problem he had with his course on logic: it took way too long to grade proof submissions, and the teaching assistants were getting fed up. It’s one thing to ask teaching assistants to grade assignments that need human intelligence, but it can be frustrating for TAs to be handed dry, manual grading tasks that should be automated.

I was excited. I could feel the pain this professor and his TAs were feeling. And I could see a solution that would help ease their pain.

Jay and I had found our problem.

Development process

Unsurprisingly, Dr. Burns (the professor) already had a clear idea of what he wanted. He’d felt this problem for a while. We sat with him to clarify our understanding of the problem, understand the solution he had in his mind, and validate whether that solution direction was actually the best approach to tackle this problem.

We set up a weekly project sync to ensure that we were consistently building features according to what was needed, and so that we could run any ideas we had during the week by him to get a sense of how useful they might be.

We also turned this project into our Capstone project, and were paired with two more students, turning us into a team of 4.

Audiences

We had 3 audiences in mind when developing this application:

- Professors/teaching assistants
- Students taking the Reasoning with Logic course at CSU Monterey Bay (taught by the above professors and TAs)
- Students learning about logic anywhere

While most of our functionality was targeted towards professors, TAs and their students, we didn’t want to simply show a landing page with words for people that weren’t students or professors taking this class at CSU-MB. Our vision was to let anyone from anywhere have immediate access to the proof checker without having to authenticate themselves.

For students at Monterey Bay who are enrolled in the Intro to Logic class and have signed up with their CSUMB email account, we provided functionality to:

- download assignments
- save progress on proofs
- submit their assignments

Mistakes

**Pushing to use React:** Initially, I wanted our team to use React.js for the front end of this project. I was the only member of the team who was moderately familiar with it. Given the time constraints that we set for ourselves, it didn’t make sense for us to ramp up on React and use it for the project, especially given that we could just use off-the-shelf design systems like Bootstrap to get the job done. There was no complex user interface logic or state to manage, so React didn’t offer significant benefits over an off-the-shelf JavaScript UI library for our needs.

I wanted to use React because I wanted to get better at React, not because the product warranted it or because the team would work better in React. After many discussions with my team, and a bit of introspection, I was able to get back on track and put our users and our product first, **NOT my own preferences on what tools and languages to build the product with.** I’m grateful for their patience.

**Pushing for Optical Character Recognition:** In the long run, I would like our product to have an OCR component. I’m inspired by products like Photomath and Socrates for their ability to integrate seamlessly into students’ natural workflow. As much as computers have taken over learning, I believe many students (especially in parts of the world where laptops and computers are scarce) would benefit tremendously from mobile-first solutions to their learning problems. In our use case, I would love to see an app that processes handwritten proofs and lets the user know whether the proof is valid.

But building an OCR-based mobile proof checker is NOT close to what our initial product should be: a tool to help our audience of professors, teaching assistants and students at CSU Monterey Bay. Our team was divided on whether to build an OCR-based mobile app or the web app that we ended up with, and we spent a few days discussing the trade-offs of each approach. Looking back, I feel that if I had argued from a user perspective, working backwards from who we should serve and building what they need rather than chasing an exciting long-term direction, I could have aligned the team’s expectations more quickly and finalized a product direction, saving us almost 3 days.

**Asking Users for Feedback:** We had a working prototype and were ready to hear from students how they felt about using our tool to do and submit their proof assignments. We got a lot of “hey, this is really cool” responses, but nothing tangible. We learned nothing. We didn’t have analytics set up when we soft launched our product, so we had no visibility into whether these students were even using our app, or whether they just took a look at it, went back to watching cat videos on YouTube, and shot us a kind message to be nice. Looking back, I would have integrated detailed analytics and done in-person user interviews, sitting down with a student and watching how they walk through the product.

I was heavily inspired by this lecture by Emmett Shear on how to conduct user interviews:

Challenges

- **Maintaining team morale.** I found it challenging to keep everyone on the team motivated. Jay and I were inspired to build a great product that would gain traction and would actually help people in a tangible way. **I failed to inject this enthusiasm and inspiration into the rest of the team.** Looking back, I wish I had proposed more team lunches or dinners, gotten to know our 2 other teammates better, understood what motivated them, and tried to create an environment that would encourage them to contribute more.
- **Unrealistic timeline.** We used the OpenLogic framework to perform a fundamental part of our proof-checking logic. We spent a lot more time than planned learning how OpenLogic worked so that we could build on top of it well.
- **Effectively training the team on fundamental tools.** Not all members of the team were comfortable using Git, which led to some hairy collaboration issues that caused us to nuke our repo and spin up a new one. I should have better gauged our collective understanding of Git, held training sessions for our team, and shared resources I found helpful for learning Git, **before** we started committing code.

Code & Technology

We used MongoDB as our database to store problems (that professors can upload), partial solutions, submissions, and authenticated user tokens.

For authentication we used Passport.js. Signing up is only available for CSU Monterey Bay students for the time being.
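As a rough illustration of the sign-up restriction, the sketch below shows one way to accept only campus email addresses before an account is created. It is not our actual Passport.js configuration; the `isAllowedSignupEmail` helper is hypothetical, and the `csumb.edu` domain is an assumption made for the example.

```typescript
// Assumed campus email domain; adjust to whatever the school actually uses.
const ALLOWED_DOMAIN = "csumb.edu";

// Hypothetical helper: returns true only for addresses of the form
// <something>@<allowedDomain>, compared case-insensitively.
function isAllowedSignupEmail(
  email: string,
  allowedDomain: string = ALLOWED_DOMAIN
): boolean {
  const trimmed = email.trim().toLowerCase();
  const at = trimmed.lastIndexOf("@");
  // Require a non-empty local part and a non-empty domain part.
  if (at <= 0 || at === trimmed.length - 1) return false;
  // Reject addresses containing more than one "@".
  if (trimmed.indexOf("@") !== at) return false;
  // Exact match, so look-alike domains such as evil-csumb.edu are rejected.
  return trimmed.slice(at + 1) === allowedDomain.toLowerCase();
}

console.log(isAllowedSignupEmail("student@csumb.edu")); // true
console.log(isAllowedSignupEmail("student@gmail.com")); // false
```

A check like this would run in the sign-up handler before any user record is written, so that everyone else can still use the proof checker without an account.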

Future Improvements

- We want to better interface with iLearn (an education platform that helps colleges manage their classroom functions, e.g. publishing grades and assigning homework). Our current solution is stand-alone and still requires the professor to enter student submissions made through our app into the iLearn platform so that they show up in the gradebook.
- We want to expand to other schools. Intro to Logic is a general introductory class taught to Computer Science, Math and Philosophy majors throughout the country. We see an opportunity to serve this larger audience, and we have a lot of work to do. There are a variety of education software suites that colleges use. We need to understand how to interface with each, and prioritize which ones to tackle first (most likely the platform used by the most colleges, so that our solution works right away for a large number of users).
- The product itself has a lot of areas that can be fine-tuned. See my analysis of improvements to the existing design, below:

User Experience Improvement Areas

Real-time form validation when signing up and logging in. Currently, we perform checks when the form is submitted and show users an error message in a pop-up dialog if a check fails. We stop at the first error our code finds in the form, rather than aggregating all the errors and showing them together. This is a horrible user experience because a) users don’t know what all of the validations are up front, so even if they fix the issue shown in the pop-up, another issue may come up after that, and b) they have to wait for a pop-up to tell them whether their submission went through… this is 2019, not 2002. Users are much more accustomed to quick and seamless sign-up experiences.
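The difference between stopping at the first error and aggregating all of them can be sketched as follows. This is an illustrative example, not our production code; the `SignupForm` fields, the `validateSignup` function, and the specific rules (such as the 8-character minimum) are assumptions.

```typescript
// Hypothetical form shape, chosen only for illustration.
interface SignupForm {
  email: string;
  password: string;
  confirmPassword: string;
}

// Every field maps to the full list of problems found for it.
type FieldErrors = Partial<Record<keyof SignupForm, string[]>>;

// Runs every check and collects all failures instead of returning
// as soon as the first one is found.
function validateSignup(form: SignupForm): FieldErrors {
  const errors: FieldErrors = {};
  const add = (field: keyof SignupForm, message: string): void => {
    const list = errors[field] ?? [];
    list.push(message);
    errors[field] = list;
  };

  // The rules below are example rules, not the app's real ones.
  if (form.email.indexOf("@") === -1) add("email", "Enter a valid email address.");
  if (form.password.length < 8) add("password", "Use at least 8 characters.");
  if (!/[0-9]/.test(form.password)) add("password", "Include at least one digit.");
  if (form.password !== form.confirmPassword) {
    add("confirmPassword", "Passwords do not match.");
  }
  return errors;
}

// A form is valid when no field has any error.
function isValid(errors: FieldErrors): boolean {
  return Object.keys(errors).length === 0;
}

const result = validateSignup({ email: "nope", password: "abc", confirmPassword: "xyz" });
console.log(result); // lists every problem for every field at once
```

Because the function is pure and cheap, it could be called on every input change to show messages next to each field, which is the real-time behavior described above.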

Current pop-up based validation when a user submits the form:

Real-time validation feature that we’re rolling out soon:

Conclusion

See our project live at: openlogic.gautamtata.com.