SESR: Simultaneous Enhancement and Super-Resolution for Underwater Imagery

I've spent a lot of time working with underwater imagery. Previously, I built a computer vision system for detecting marine debris and experimented with GANs for generating synthetic underwater data. Through both projects, one thing became painfully clear: underwater images are terrible to work with.

The ocean doesn't cooperate with cameras. Light behaves differently underwater - colors shift toward blue-green, contrast drops off dramatically, and everything looks like it's been run through a blur filter. If you want to build reliable vision systems for underwater robots, you need to fix the images first.

That's what led me to contribute to SESR - a generative model that tackles enhancement and super-resolution simultaneously, designed specifically for the challenges of underwater imagery.

The Problem

Traditional approaches treat image enhancement and super-resolution as separate problems. You might run an enhancement algorithm to fix the colors, then a separate super-resolution model to increase detail. This works, but it's inefficient - and in the underwater domain, the two problems are deeply intertwined.

Underwater images suffer from a specific set of degradations:

- Color distortion: Light attenuates at different rates depending on wavelength. Red disappears first, leaving that characteristic blue-green cast.

- Low contrast: Particles in the water scatter light, reducing the difference between foreground and background.

- Blurriness: A combination of optical effects and the scattering medium makes everything soft.

These aren't independent issues. The color shift affects perceived contrast. The scattering that causes blur also affects color. Solving them together makes more sense than treating them in isolation.
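To see how one physical process produces both the color cast and the contrast loss, here's a rough sketch of a simplified underwater image formation model (attenuated scene light plus scattered ambient light). This is not part of SESR. The coefficients in `BETA` and `BACKSCATTER` are made-up illustrative values, `degrade` is a hypothetical helper name, and blur is not modeled at all.

```python
import numpy as np

# Illustrative per-channel attenuation coefficients (1/m) for R, G, B.
# Made-up values, chosen only so that red fades fastest.
BETA = np.array([0.60, 0.12, 0.08])

# Assumed color of the ambient light scattered toward the camera (R, G, B).
BACKSCATTER = np.array([0.05, 0.45, 0.55])


def degrade(clean_rgb: np.ndarray, distance_m: float) -> np.ndarray:
    """Apply a simplified formation model to an RGB image with values in [0, 1]."""
    transmission = np.exp(-BETA * distance_m)    # one value per channel
    direct = clean_rgb * transmission            # attenuated scene radiance
    veil = BACKSCATTER * (1.0 - transmission)    # scattered ambient light
    return np.clip(direct + veil, 0.0, 1.0)


rng = np.random.default_rng(0)
scene = rng.random((4, 4, 3))
murky = degrade(scene, distance_m=5.0)

print("mean R, G, B before:", scene.mean(axis=(0, 1)).round(3))
print("mean R, G, B after: ", murky.mean(axis=(0, 1)).round(3))
print("red-channel contrast (std) before/after:",
      scene[..., 0].std().round(3), murky[..., 0].std().round(3))
```

The same `transmission` term drives both effects: it shrinks the red channel toward the backscatter color and compresses the range of every channel, which is why color and contrast are hard to fix independently.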

The Architecture

SESR is built on a residual-in-residual dense network. If that sounds like a mouthful, here's the intuition: the network learns features at multiple scales and combines them hierarchically. This is particularly useful for underwater images because different types of degradation manifest at different scales.

The network has two parallel branches with different kernel sizes (3×3 and 5×5) that extract local features. These get fused into a global feature map that captures both fine details and broader context.
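As a toy illustration of the two-branch idea, the sketch below runs one 3×3 and one 5×5 filter over the same single-channel image and stacks the results. It's an assumption-heavy simplification: the real branches are learned multi-channel layers inside a deep network, the fusion here is plain stacking rather than whatever the model actually learns, and `conv2d_same` and `two_branch_features` are hypothetical names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 2D cross-correlation with zero padding (same output size)."""
    k = kernel.shape[0]                    # assumes a square, odd-sized kernel
    padded = np.pad(image, k // 2, mode="constant")
    windows = sliding_window_view(padded, (k, k))   # shape (H, W, k, k)
    return np.einsum("hwij,ij->hw", windows, kernel)


def two_branch_features(image, kernel3, kernel5):
    """Run a small and a large receptive field in parallel, then stack them."""
    fine = conv2d_same(image, kernel3)     # narrow context: edges, texture
    broad = conv2d_same(image, kernel5)    # wider context: smooth structure
    return np.stack([fine, broad], axis=0)  # shape (2, H, W)


rng = np.random.default_rng(0)
image = rng.random((8, 8))
features = two_branch_features(
    image,
    kernel3=rng.standard_normal((3, 3)),   # stand-ins for learned weights
    kernel5=rng.standard_normal((5, 5)),
)
print(features.shape)  # (2, 8, 8)
```

Zero padding keeps both branch outputs the same size as the input, which is what makes them stackable without any cropping.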

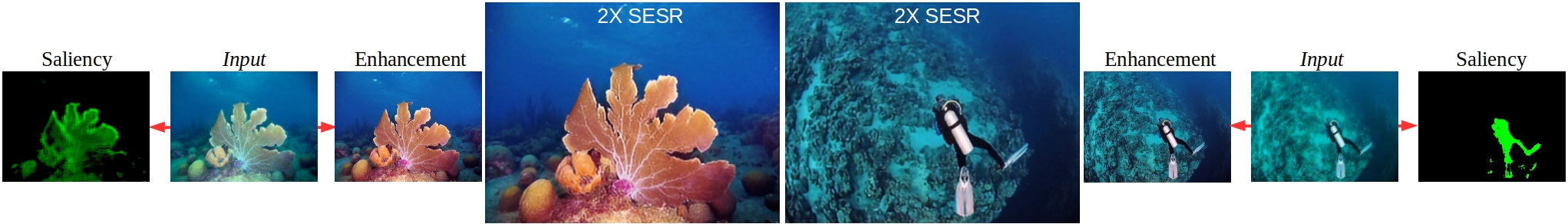

The clever part is the Auxiliary Attention Network (AAN), which predicts a saliency map for each image. This map identifies which regions matter most - typically the foreground objects you actually care about. The saliency prediction guides the enhancement process, ensuring the model focuses its efforts on the important parts of the scene rather than wasting capacity on empty water.

Training With Purpose

What makes SESR work is its multi-modal objective function. Instead of just minimizing pixel-wise error, the model is trained with losses that specifically target underwater degradations:

Saliency Loss teaches the model to correctly identify important regions in the image.

Contrast Loss specifically addresses the washed-out appearance of underwater scenes by focusing on foreground pixel intensity recovery.

Color Loss uses wavelength-dependent chrominance terms. This is key - it doesn't just compare colors, it encodes the fact that underwater color distortion follows specific physical patterns.

Sharpness Loss evaluates image gradients to recover edge detail lost to scattering.

Content Loss ensures high-level features match the ground truth by comparing the feature maps that a pre-trained VGG-19 network extracts from the output and from the ground-truth image.

This multi-pronged approach means the model isn't just learning to minimize some abstract error metric - it's learning to solve the specific problems that plague underwater imagery.
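To give a feel for how such terms can be combined, here's a minimal sketch of two of them - a saliency-weighted contrast term and a gradient-based sharpness term - plus a weighted sum. These are simplified stand-ins, not the formulations used in SESR: the function names, the grayscale conversion, the squared-error form, and the weights `w_contrast` and `w_sharp` are all assumptions, and the color and content terms are left out.

```python
import numpy as np


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Luma approximation for an (H, W, 3) image with values in [0, 1]."""
    return rgb @ np.array([0.299, 0.587, 0.114])


def contrast_loss(pred, target, saliency):
    """Squared intensity error, averaged with saliency weights in [0, 1]."""
    err = (to_gray(pred) - to_gray(target)) ** 2
    return float((saliency * err).sum() / (saliency.sum() + 1e-8))


def gradient_magnitude(gray: np.ndarray) -> np.ndarray:
    gy, gx = np.gradient(gray)             # finite differences along rows, columns
    return np.sqrt(gx ** 2 + gy ** 2)


def sharpness_loss(pred, target):
    """Mean squared difference between the two edge-strength maps."""
    diff = gradient_magnitude(to_gray(pred)) - gradient_magnitude(to_gray(target))
    return float(np.mean(diff ** 2))


def total_loss(pred, target, saliency, w_contrast=1.0, w_sharp=1.0):
    """Weighted sum of the two illustrative terms (weights are placeholders)."""
    return (w_contrast * contrast_loss(pred, target, saliency)
            + w_sharp * sharpness_loss(pred, target))


rng = np.random.default_rng(0)
target = rng.random((8, 8, 3))
pred = np.clip(target + 0.1 * rng.standard_normal(target.shape), 0.0, 1.0)
saliency = np.zeros((8, 8))
saliency[2:6, 2:6] = 1.0                   # pretend the object is in the center
print(total_loss(pred, target, saliency))
```

Because the contrast term is weighted by the saliency map, errors in the background water contribute nothing to it, while the sharpness term still looks at the whole frame.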

The model was trained on UFO-120, a dataset we introduced with 1500 training samples and 120 test samples of paired underwater images. Having a proper benchmark for this domain was long overdue.

Results

SESR outperforms existing methods on standard metrics - higher peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) scores against the ground truth, and better UIQM (Underwater Image Quality Measure) performance. But the numbers don't fully capture the improvement. The enhanced images have more natural colors, better contrast, and sharper details.
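For readers who haven't computed these metrics before, PSNR is the simplest of the three: it's a log-scaled inverse of the mean squared error against the ground truth. The sketch below uses the standard definition. The helper name `psnr` and the toy arrays are mine, not from the SESR evaluation code.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    mse = np.mean((reference.astype(np.float64) - estimate.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")                # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


reference = np.zeros((4, 4))
estimate = np.full((4, 4), 0.1)            # uniform error of 0.1, so MSE is 0.01
print(psnr(reference, estimate))           # about 20 dB
```

Higher is better, and because the scale is logarithmic, every 10 dB corresponds to a tenfold reduction in mean squared error.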

Ablation studies confirmed what we suspected: the saliency-driven contrast loss is doing a lot of heavy lifting. Remove it, and contrast recovery drops significantly. The attention mechanism isn't just a nice-to-have - it's essential for guiding the enhancement toward what matters.

Real-Time Performance

Here's where things get practical. Underwater robots need to process images in real time. A model that takes seconds per frame is useless for navigation or object detection.

SESR runs at 7.75 frames per second on an Nvidia AGX Xavier - a single-board computer you can actually deploy on a robot. The model's memory footprint is only 10 MB. This efficiency comes from design choices like skip connections and dense feature extraction that reduce computational load without sacrificing quality.
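A quick back-of-the-envelope calculation shows what that frame rate means on a moving vehicle. The 7.75 figure is the one reported above. The vehicle speed is a purely hypothetical value for illustration, and both helper names are made up.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def travel_per_frame_m(fps: float, speed_m_per_s: float) -> float:
    """Distance the vehicle covers between two consecutive processed frames."""
    return speed_m_per_s / fps


SESR_FPS = 7.75        # frame rate reported above
ASSUMED_SPEED = 0.5    # hypothetical vehicle speed in m/s

print(f"{frame_budget_ms(SESR_FPS):.1f} ms per frame")            # 129.0 ms per frame
print(f"{travel_per_frame_m(SESR_FPS, ASSUMED_SPEED) * 100:.1f} cm per frame")  # 6.5 cm per frame
```

At that assumed speed the robot moves only a few centimeters between enhanced frames, which is the kind of margin that makes on-board processing workable.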

For a robot navigating murky waters, being able to enhance and upscale imagery on the fly transforms what's possible. Objects that would be invisible in raw footage become detectable. Navigation becomes reliable in conditions where it would otherwise be impossible.

Beyond Underwater

Interestingly, SESR generalizes well to regular images too. Evaluated on standard benchmarks like Set5 and Set14, it achieves competitive PSNR and SSIM scores. The architecture and training approach aren't underwater-specific - they just happen to work particularly well there because of how the losses are designed.

This suggests the residual-in-residual approach with saliency guidance could be useful for a broader range of image restoration tasks. The underwater domain forced us to think carefully about what degradations we were solving, and that careful thinking paid off.

Conclusion

SESR represents a unified solution for underwater image enhancement and super-resolution. By combining these traditionally separate tasks and training with losses that specifically target underwater degradations, we achieved state-of-the-art results while maintaining real-time performance.

For anyone building underwater vision systems, the takeaway is that treating enhancement and super-resolution together isn't just more elegant - it's more effective. The UFO-120 dataset provides a benchmark for future work, and the architecture offers a template for domain-specific image restoration.

The underwater world is still hard to photograph, but at least now we can do something about the images we get.